Your blueprints look perfect. Now, how fast can you make them look real?

For years, photorealistic visuals for industries like real estate, automotive, and eCommerce were a major bottleneck. Turning a simple design sketch or a floor plan into a stunning, high-resolution (HD) image took days, required huge computing power, and cost a fortune. Early AI models tried, but their outputs were blurry and low-resolution, not fit for commercial use.

The game changed with one breakthrough: Pix2PixHD. This advanced system didn’t just clean up blurry images; it mastered the art of generating 2048×1024 pixel photorealism from simple inputs.

If you need commercial-grade visuals at scale, this is the technology you need to understand. Keep reading to see the architecture that powers this stunning leap forward.

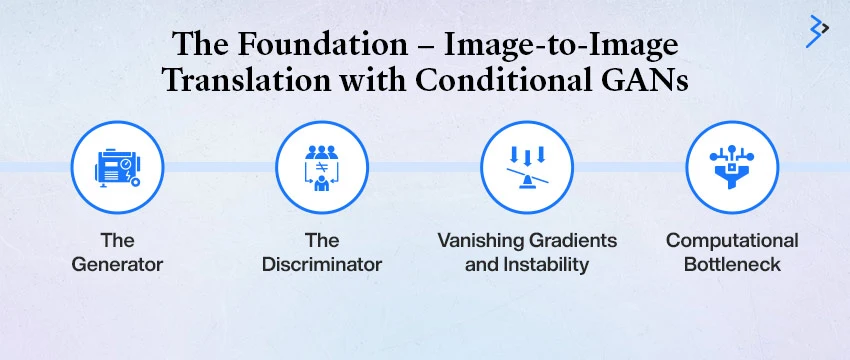

Section 1 | The Foundation – Image-to-Image Translation with Conditional GANs

To appreciate the “HD” achievement, we must first understand the foundation: Image-to-Image Translation using Conditional Generative Adversarial Networks (cGANs).

A cGAN consists of two competing neural networks:

- The Generator (G): It generates synthetic images from an input condition (such as a semantic label map, a sketch, or an edge detection image).

- The Discriminator (D): It acts as a harsh critic, trying to distinguish the generator’s synthetic output from a real, ground-truth photograph.

For example, a generator can be trained to produce street-view images from segmented city maps; the segmentation map is the “condition” in conditional training. The two networks compete in a zero-sum game: the generator tries to deceive the Discriminator, and the Discriminator tries to spot the fakes.
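
The zero-sum game can be made concrete with a little arithmetic. The sketch below assumes the classic log-loss GAN value function (a given implementation may use a different variant, such as least-squares). The names `value`, `d_real`, and `d_fake` are hypothetical; the two scores stand for the Discriminator's estimated probability that a real pair and a generated pair are real.

```python
import math

def value(d_real: float, d_fake: float) -> float:
    """Classic GAN value function for one real and one generated sample.
    The Discriminator tries to maximise it; the generator tries to minimise it."""
    return math.log(d_real) + math.log(1.0 - d_fake)

# Hypothetical scores: the Discriminator correctly doubts the fake (0.1).
d_winning = value(d_real=0.9, d_fake=0.1)

# Hypothetical scores: the generator has fooled it (fake scored 0.9).
g_winning = value(d_real=0.9, d_fake=0.9)

print(f"Discriminator ahead: V = {d_winning:.3f}")
print(f"Generator ahead:     V = {g_winning:.3f}")
```

The same number is pushed in opposite directions by the two networks, which is why any gain for one is a loss for the other.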

The original Pix2Pix demonstrated this beautifully: feed it a rough drawing of a handbag, and it generates a realistic synthetic photograph of a handbag. However, traditional cGANs struggled immensely when scaled to high resolutions due to two major hurdles:

- Vanishing Gradients and Instability: Training large GANs can lead to significant instability, often resulting in “mode collapse.” This phenomenon occurs when the generator produces only a narrow range of images, limiting the diversity of outputs.

- Computational Bottleneck: Processing image tensors at resolutions of 1024×512 or 2048×1024 demands substantial memory and compute. A single network that upsamples step by step to these sizes tends to lose fine detail along the way, which shows up as noticeable blurriness.
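
To get a feel for the computational side, here is a rough back-of-the-envelope sketch. It assumes a single float32 feature map with 64 channels, which is an illustrative figure and not a number taken from Pix2PixHD itself; real training holds many such maps at once, plus gradients.

```python
def activation_bytes(width, height, channels=64, bytes_per_value=4):
    """Memory for one feature map of shape (channels, height, width).
    channels=64 and 4-byte float32 values are illustrative assumptions."""
    return width * height * channels * bytes_per_value

MIB = 1024 ** 2
small = activation_bytes(256, 256)      # a typical early-GAN resolution
large = activation_bytes(2048, 1024)    # the HD target resolution

print(small // MIB, "MiB per feature map at 256x256")
print(large // MIB, "MiB per feature map at 2048x1024")
print(large // small, "times more memory per layer")
```

Under these assumptions a single layer's activations grow from 16 MiB to 512 MiB, a 32-fold increase, before counting batch size or the backward pass.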

The breakthrough occurred not in the concept, but in the architecture.

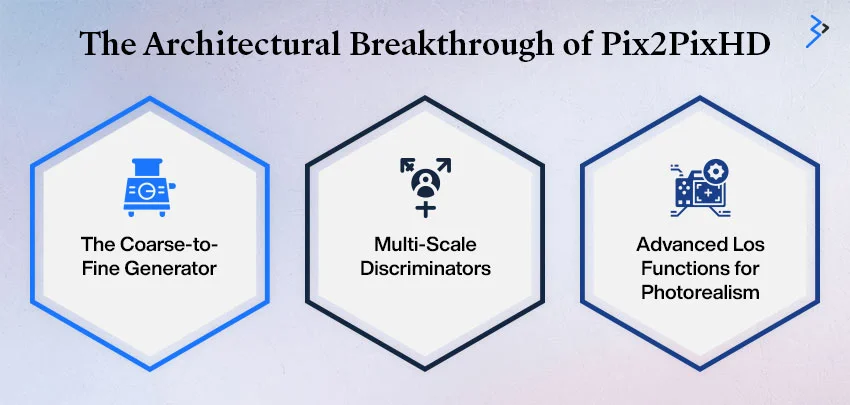

Section 2 | The Architectural Breakthrough of Pix2PixHD

Pix2PixHD’s ability to stabilize training and generate stunning detail relies on three critical architectural innovations that transform how the image is processed and critiqued.

01 | The Coarse-to-Fine Generator

Instead of a single, monolithic network struggling to process high-resolution inputs, Pix2PixHD uses a Cascaded Generator structure that operates in two stages:

- Global Generator (G1): This acts as the “coarse” network. It takes a downsampled version of the input map (e.g., 1024×512) and produces a lower-resolution, but structurally consistent, output image. This network learns the global composition (where the buildings, roads, and sky should be).

- Local Enhancer (G2): This network adds high-resolution detail. It takes the full-resolution input map (e.g., 2048×1024) together with the output features from the Global Generator. Because the global context is already in place, it can concentrate on intricate local details, like brick patterns and reflections, without a single network having to learn everything at full resolution.

This hierarchical approach ensures that the output is coherent both globally and locally.
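
A minimal sketch of that two-stage data flow, using toy stand-ins rather than real neural networks. All function names are hypothetical, and fusing the two feature maps with an element-wise sum is an assumption made for illustration. The point is only to show which resolution each stage sees.

```python
def downsample2x(grid):
    """Keep every second row and column (nearest-neighbour subsampling)."""
    return [row[::2] for row in grid[::2]]

def upsample2x(grid):
    """Double height and width by repeating each value."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def global_generator(coarse_map):
    """Toy stand-in for G1: produces features at the coarse resolution."""
    return [[0.5 * v for v in row] for row in coarse_map]

def local_enhancer(full_map, global_features):
    """Toy stand-in for G2: combine its own reduced-resolution features with
    G1's features, then return to the full input resolution."""
    local_features = downsample2x(full_map)              # G2 front end
    fused = [[a + b for a, b in zip(r1, r2)]             # element-wise sum
             for r1, r2 in zip(local_features, global_features)]
    return upsample2x(fused)                             # G2 back end

# Toy "label map": 4 rows high, 8 columns wide.
full_map = [[float((x + y) % 3) for x in range(8)] for y in range(4)]
coarse_map = downsample2x(full_map)                      # what G1 sees
output = local_enhancer(full_map, global_generator(coarse_map))

print("coarse size:", len(coarse_map[0]), "x", len(coarse_map))
print("output size:", len(output[0]), "x", len(output))
```

G1 only ever works on the half-size map, while the final output comes back at the same size as the full-resolution input.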

02 | Multi-Scale Discriminators (The Expert Critics)

Traditional GANs use a single Discriminator that often misses minor imperfections in HD images, like blurriness on distant objects.

Pix2PixHD employs a pyramid of three Multi-Scale Discriminators (D1, D2, D3).

- D1: Operates on the original, full-resolution image (2048×1024).

- D2: Operates on the image downsampled by a factor of two.

- D3: Operates on the image downsampled by a factor of four.

All three Discriminators share the same network structure but operate at different scales. The Discriminator at the coarsest scale sees the largest share of the image and checks global composition, while the one at the finest scale checks detailed textures. Being critiqued at every scale compels the generator to create realistic features at every level of detail. This innovation is key to eliminating the “blurriness” that plagued earlier GANs.
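
The image pyramid behind the three critics can be sketched in a few lines. This is a simplified illustration that assumes plain 2×2 average pooling for downsampling; `toy_discriminator` is a hypothetical placeholder that returns a single score, not a real network.

```python
def downsample2x(img):
    """Halve width and height by averaging each 2x2 block of pixels."""
    return [
        [(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
         for x in range(0, len(img[0]) - 1, 2)]
        for y in range(0, len(img) - 1, 2)
    ]

def build_pyramid(img, num_scales=3):
    """Return [full, half, quarter] resolution versions of the image."""
    pyramid = [img]
    for _ in range(num_scales - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid

def toy_discriminator(img):
    """Hypothetical critic: the mean pixel value stands in for a realness score."""
    values = [v for row in img for v in row]
    return sum(values) / len(values)

# Toy single-channel image: 16 pixels wide, 8 pixels high.
image = [[float(x % 2) for x in range(16)] for _ in range(8)]
pyramid = build_pyramid(image)
sizes = [(len(level[0]), len(level)) for level in pyramid]   # (width, height)
scores = [toy_discriminator(level) for level in pyramid]     # one per D1, D2, D3

print(sizes)
```

Each level of the pyramid is handed to its own critic, so the generator receives feedback on the same image at full, half, and quarter resolution.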

03 | Advanced Loss Functions for Photorealism

Pix2PixHD improves the adversarial learning objective by incorporating a Feature Matching Loss (L_FM). Rather than only penalizing the generator for incorrect pixels (as an L1 or L2 loss does), the Feature Matching Loss compares the Discriminator’s intermediate feature representations of the real image with those of the generated image.

The generator must therefore do more than fool the Discriminator’s final verdict: its output must also produce intermediate-layer activations that match those of a real photo. This approach stabilizes training and enhances photorealism and texture synthesis.
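
As a rough illustration, a feature matching loss can be computed as the sum, over Discriminator layers, of the mean absolute difference between the features for the real image and for the generated one. The sketch below assumes each layer's activations have been flattened into a plain list; the function names and the toy numbers are hypothetical.

```python
def l1_mean(a, b):
    """Mean absolute difference between two equally sized feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def feature_matching_loss(real_feats, fake_feats):
    """Sum of per-layer mean L1 distances. Each argument is a list of layers,
    and each layer is a flat list of Discriminator activations."""
    return sum(l1_mean(r, f) for r, f in zip(real_feats, fake_feats))

# Toy activations from two Discriminator layers (made-up numbers).
real_feats = [[1.0, 2.0], [0.0, 4.0, 8.0, 2.0]]
fake_feats = [[1.0, 1.0], [1.0, 4.0, 6.0, 2.0]]

loss = feature_matching_loss(real_feats, fake_feats)
print(loss)  # 0.5 from the first layer + 0.75 from the second = 1.25
```

The loss falls to zero only when the generated image triggers the same internal responses as the real photo, which is a stricter target than matching the final real-or-fake score.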

Section 3 | The Business Impact – Applications in the Visual Economy

Converting abstract inputs into high-resolution, photorealistic outputs has significant commercial implications in visual markets.

NVIDIA’s open-source release of pix2pixHD is distributed under a BSD license, which permits commercial use, so enterprises can deploy it in closed-source commercial projects.

Fortune Business Insights projects that the global AI image generator market will grow from $299.30 million in 2023 to $917.45 million by 2030, achieving a CAGR of 17.4%.

Here are the critical enterprise applications powered by Pix2PixHD:

01 | Real Estate and Architectural Visualization

A Pix2PixHD model trained on suitable paired data can quickly convert label maps derived from CAD models and floor plans into photorealistic renderings, so designs can be visualized before anything is built.

- Benefit: Enables rapid iteration of design options and the creation of unlimited virtual staging environments, accelerating sales cycles.

02 | eCommerce and Virtual Photography

eCommerce businesses constantly need unique, personalized, and context-specific product images. Instead of costly photoshoots, retailers can use Pix2PixHD to place a single 3D product model into many different scenes.

- Example: Using semantic background maps, a shoe manufacturer can quickly create HD mockups of shoes on different backgrounds, like cobblestone streets or beaches. The process significantly speeds up the time needed to launch new visual campaigns.

03 | Data Augmentation and Simulation

One of the most valuable, non-obvious applications is creating synthetic training data for other AI systems, particularly in autonomous vehicle (AV) development. AVs require millions of varied driving scenarios, from snowstorms to nighttime rain.

- Process: Developers use Pix2PixHD to render visually realistic, challenging scenarios from semantic label maps, such as a foggy version of a scene that was originally captured in sunshine. Because every generated image comes from a known label map, it is labeled automatically, which helps offset the shortage of high-quality annotated datasets.

04 | Interactive and Immersive Content (AR/VR)

The ability of Pix2PixHD to handle semantic manipulation in HD opens doors for interactive design. Users can adjust a semantic label map (e.g., changing a park area to a parking lot) and see the photorealistic result regenerated almost immediately. This is vital for creating highly responsive and detailed augmented reality (AR) or virtual reality (VR) environments.
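
The editing step itself is simple, because a label map is just a grid of class IDs. Here is a minimal sketch of the park-to-parking-lot edit; the class IDs and the `relabel_region` helper are hypothetical, and the edited map would then be passed to a trained generator to re-render the scene.

```python
PARK, PARKING_LOT, ROAD = 1, 2, 3   # hypothetical class IDs

def relabel_region(label_map, top, left, bottom, right, old_id, new_id):
    """Return a copy in which pixels equal to old_id inside the box
    rows [top, bottom) and columns [left, right) become new_id."""
    edited = [row[:] for row in label_map]
    for y in range(top, bottom):
        for x in range(left, right):
            if edited[y][x] == old_id:
                edited[y][x] = new_id
    return edited

label_map = [
    [ROAD, ROAD, ROAD, ROAD],
    [PARK, PARK, PARK, ROAD],
    [PARK, PARK, PARK, ROAD],
]

# Turn the left two columns of the park into a parking lot.
edited = relabel_region(label_map, top=1, left=0, bottom=3, right=2,
                        old_id=PARK, new_id=PARKING_LOT)
for row in edited:
    print(row)
```

Only the selected pixels change, and the original map is left untouched, so a user can compare the before and after renderings side by side.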

Section 4 | Implementing Pix2PixHD – Challenges and Strategic Partnership

While the capability of Pix2PixHD is immense, its implementation in an enterprise environment presents specific challenges:

1. Computational Requirements

Pix2PixHD requires significant computational horsepower, particularly for the multi-scale Discriminators and high-resolution training. Training a large-scale model often necessitates specialized GPU hardware with large memory capacity (e.g., 11GB+ NVIDIA GPUs).

2. Data Curation

Although the model is highly effective, it requires large datasets of meticulously paired images (input map to output photo) for practical training. Curation and annotation of this data are often the most time-consuming steps.
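
A small sanity check can catch broken pairs before training starts. The sketch below assumes label maps and photos are matched by file name (for example `0001.png` and `0001.jpg`); the folder names and the `pair_by_stem` helper are hypothetical, not a convention required by Pix2PixHD.

```python
from pathlib import PurePath

def pair_by_stem(label_files, photo_files):
    """Match label maps to photos that share a file stem.
    Returns (pairs, unmatched_stems)."""
    labels = {PurePath(p).stem: p for p in label_files}
    photos = {PurePath(p).stem: p for p in photo_files}
    shared = sorted(labels.keys() & photos.keys())
    unmatched = sorted(labels.keys() ^ photos.keys())
    return [(labels[s], photos[s]) for s in shared], unmatched

label_files = ["labels/0001.png", "labels/0002.png", "labels/0003.png"]
photo_files = ["photos/0001.jpg", "photos/0003.jpg", "photos/0004.jpg"]

pairs, unmatched = pair_by_stem(label_files, photo_files)
print("usable pairs:", pairs)
print("needs attention:", unmatched)
```

Files that appear on only one side are reported instead of silently dropped, so gaps in the dataset are visible during curation.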

3. Expertise in Loss Function Customization

Optimal results in a business domain require customized loss functions that balance adversarial, feature matching, and perceptual losses.
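
Balancing the terms usually comes down to a weighted sum. The sketch below is illustrative only: the weights of 10.0 and the loss values are assumed starting points for experimentation, not recommended settings.

```python
def generator_objective(adversarial, feature_matching, perceptual,
                        lambda_fm=10.0, lambda_perc=10.0):
    """Weighted sum of the three generator loss terms.
    The default weights are illustrative assumptions to be tuned per domain."""
    return (adversarial
            + lambda_fm * feature_matching
            + lambda_perc * perceptual)

# Made-up loss values from one training step.
terms = dict(adversarial=0.75, feature_matching=0.125, perceptual=0.0625)

balanced = generator_objective(**terms)
texture_heavy = generator_objective(**terms, lambda_fm=20.0)

print(balanced)       # 0.75 + 1.25 + 0.625 = 2.625
print(texture_heavy)  # 0.75 + 2.5 + 0.625 = 3.875
```

Raising one weight makes that term dominate the gradient the generator receives, which is the main lever when a domain needs, say, sharper textures at the cost of looser adversarial realism.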

Overcoming these barriers requires specialized AI engineering expertise. Strategic partners must navigate high-demand infrastructure, manage data pipelines, and customize architecture to achieve business outcomes.

Conclusion: The Future of Visual Reality is Generative

Pix2PixHD is a significant advancement in AI technology that enables the creation of high-quality, realistic images. Its coarse-to-fine generator, multi-scale Discriminators, and feature matching loss improve image quality and training stability, addressing common issues in previous AI models. For businesses that depend on visual content, such as marketing and design teams, Pix2PixHD can lower costs and speed up the creation of personalized visuals.

Getting the most from this technology takes specialized knowledge and experience. Brainvire develops and implements advanced AI models like Pix2PixHD, ensuring seamless integration with your existing systems. Partnering with Brainvire helps your organization harness the benefits of high-quality, AI-generated visuals.

FAQs

Older Generative Adversarial Networks (GANs) had trouble producing clear images larger than 256×256 pixels, often resulting in blurriness. Pix2PixHD improved this with a multi-scale design that combines a cascaded generator and multiple discriminators, helping it create detailed, high-resolution images up to 2048×1024 pixels.

A semantic label map assigns each pixel the class of the object it belongs to, usually shown as a color; for example, blue for “road” and green for “tree.” This map is the condition that guides a cGAN such as Pix2PixHD, which generates a realistic image that follows the layout. Editing the map changes the generated image accordingly.
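
In practice, a colored label map is typically converted to class IDs and then to one binary channel per class before it reaches the network. A minimal sketch, assuming the blue-for-road and green-for-tree coloring from the example above; the exact RGB values and helper names are hypothetical.

```python
BLUE, GREEN = (0, 0, 255), (0, 255, 0)

COLOR_TO_CLASS = {
    BLUE: 0,    # road
    GREEN: 1,   # tree
}

def to_class_ids(color_map):
    """Replace each RGB pixel with its integer class ID."""
    return [[COLOR_TO_CLASS[pixel] for pixel in row] for row in color_map]

def to_one_hot(class_ids, num_classes):
    """One binary channel per class: channel c is 1 where the pixel is class c."""
    return [
        [[1 if v == c else 0 for v in row] for row in class_ids]
        for c in range(num_classes)
    ]

color_map = [
    [BLUE, BLUE, GREEN],
    [BLUE, GREEN, GREEN],
]

class_ids = to_class_ids(color_map)
one_hot = to_one_hot(class_ids, num_classes=2)

print(class_ids)    # [[0, 0, 1], [0, 1, 1]]
print(one_hot[0])   # road channel: [[1, 1, 0], [1, 0, 0]]
print(one_hot[1])   # tree channel: [[0, 0, 1], [0, 1, 1]]
```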

Pix2PixHD is a tool that transforms images from one type to another by learning from pairs of images. Depending on the paired data it is trained on, it can, for example, turn outlines into realistic images, colorize black-and-white photos, or enhance low-resolution images.

Pix2PixHD requires a lot of memory for training because of its large high-resolution tensors and multiple networks (a two-stage Generator and three Discriminators). Training typically needs an NVIDIA GPU with at least 11GB of memory; data-center GPUs such as the V100 or A100 exceed that comfortably. For businesses, cloud-based GPU services are often a more affordable option than buying the hardware.
