Artificial intelligence (AI) has transformed numerous industries, from customer service to data analysis. However, the effectiveness of AI models depends mainly on their adaptability and accuracy. Custom Large Language Models (LLMs) allow businesses to optimize AI performance for specific applications, ensuring improved response quality, efficiency, and cost savings. Fine-tuning AI models, particularly OpenAI’s text generation models, is crucial in this customization process. This guide explores custom LLMs, their benefits, implementation strategies, and real-world applications.

Understanding Custom LLMs

Custom LLMs are pre-trained language models refined using specialized datasets to align with a company’s unique requirements. While general-purpose AI models offer versatility, custom LLMs provide domain-specific expertise, enhanced accuracy, and better alignment with business objectives.

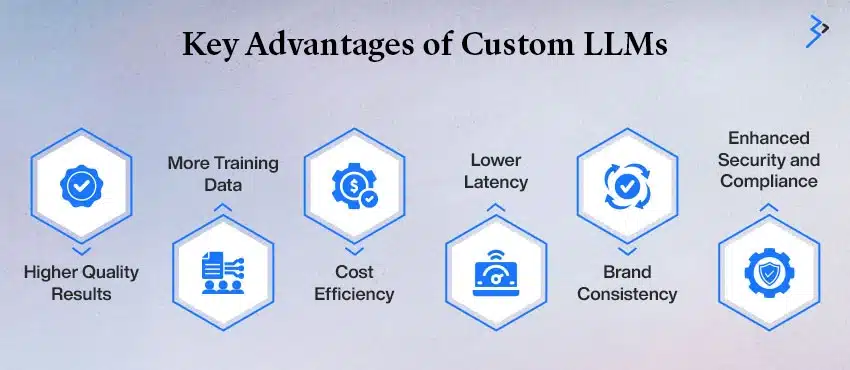

Key Advantages of Custom LLMs

- Higher Quality Results

Custom LLMs go beyond standard prompt engineering by significantly improving response accuracy and contextual understanding. By training on domain-specific data, these models better grasp industry jargon, nuanced inquiries, and complex instructions than generic AI models. This leads to more relevant, precise, and insightful responses tailored to business needs.

- More Training Data

Unlike few-shot learning, which relies on a handful of examples placed in the prompt, fine-tuning lets a custom LLM learn from many more examples than a single prompt can hold. This allows the model to internalize patterns, best practices, and structured responses from the full training set, deepening its grasp of the subject matter and reducing reliance on lengthy prompts.

- Cost Efficiency

Custom LLMs can reduce operational costs by allowing shorter, more concise prompts while maintaining response quality. Because the fine-tuned model has already learned the relevant instructions and examples, they no longer need to be sent with every request, which lowers token usage. Fine-tuned models are usually billed at a higher per-token rate than their base models, so the saving depends on how much the prompt shrinks; it is most pronounced for organizations handling high query volumes.

- Lower Latency

Optimized models can return responses faster, improving the overall user experience. A fine-tuned model needs shorter prompts, so there are fewer input tokens to process per request, and a fine-tuned smaller model can sometimes replace a larger general-purpose one. Both effects shorten turnaround times. This speed is crucial for real-time applications such as customer support chatbots, AI-driven analytics, and automated workflows.

- Brand Consistency

Custom LLMs ensure that responses align with an organization’s tone, style, and messaging guidelines. Fine-tuning allows businesses to reinforce brand identity across all AI-driven communications, whether maintaining a formal, professional voice in legal applications or adopting a friendly, engaging tone for customer interactions.

- Enhanced Security and Compliance

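Regulations such as GDPR and HIPAA, along with audit frameworks such as SOC 2, impose strict data handling requirements, making compliance a top priority for businesses leveraging AI. Fine-tuning can teach a custom LLM compliance-oriented behavior, such as declining to disclose personal data or using approved wording for regulated topics. The model alone cannot prevent unauthorized data access, however. That depends on how training data is curated and on the access controls around the deployment. Together, these measures help ensure privacy-sensitive information is handled correctly and mitigate the risks associated with regulatory violations.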
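To make the Cost Efficiency point concrete, here is a back-of-the-envelope comparison of monthly input-token spend for a long few-shot prompt on a base model versus a short prompt on a fine-tuned model. The function name and every number (prompt lengths, request volume, per-1M-token prices, and the assumed 2x fine-tuned rate) are hypothetical placeholders, not published prices.

```python
def monthly_input_cost(prompt_tokens: int, requests_per_month: int, price_per_1m: float) -> float:
    """Dollar cost of the prompt (input) tokens sent over one month."""
    return prompt_tokens * requests_per_month * price_per_1m / 1_000_000


# All figures below are hypothetical placeholders, not published prices.
REQUESTS_PER_MONTH = 1_000_000

# Base model: long prompt carrying instructions plus few-shot examples.
few_shot = monthly_input_cost(prompt_tokens=1_500, requests_per_month=REQUESTS_PER_MONTH, price_per_1m=0.15)

# Fine-tuned model: short prompt, but an assumed 2x higher per-token rate.
fine_tuned = monthly_input_cost(prompt_tokens=200, requests_per_month=REQUESTS_PER_MONTH, price_per_1m=0.30)

print(f"few-shot: ${few_shot:,.2f}  fine-tuned: ${fine_tuned:,.2f}")
# few-shot: $225.00  fine-tuned: $60.00
```

Output tokens and the one-off training cost are left out. Adding them, or assuming a steeper fine-tuned rate, can change the result, which is why it is worth rerunning this comparison with your own traffic and current pricing.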

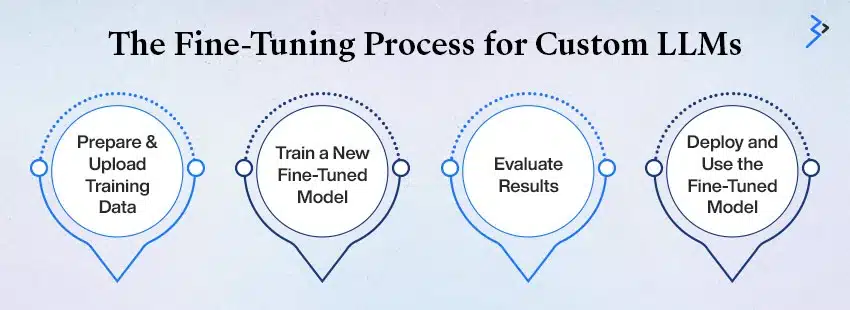

The Fine-Tuning Process for Custom LLMs

Fine-tuning an AI model to create a custom Large Language Model (LLM) involves a structured, multi-step process that ensures the model aligns with specific business needs. This process enhances accuracy, efficiency, and adaptability, making the AI model more suited to industry-specific applications. The fine-tuning process consists of four essential steps:

1. Prepare and Upload Training Data

The foundation of a well-trained custom LLM lies in high-quality, domain-specific training data. Preparing and uploading the correct dataset is crucial for achieving precise and relevant AI-generated outputs. Partnering with an experienced AI/ML development company can help ensure that your data is properly structured, optimized, and leveraged to maximize the performance of your custom LLM.

Key Steps in Data Preparation:

- Collect Domain-Specific Data: Gather text data relevant to the industry, including customer interactions, reports, manuals, compliance documents, or knowledge bases

- Structure the Data: Format the dataset in a structured manner, ensuring it aligns with the LLM’s training requirements. Data should include real-world examples, questions, and expected responses

- Clean and Preprocess Data: Remove inconsistencies, redundant information, or errors to ensure data quality. Standardizing formatting and removing bias helps maintain model reliability

- Upload to Training Environment: Once the dataset is refined, it is uploaded to the fine-tuning environment, which will be used to train the base LLM
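The cleaning and structuring steps above can be sketched in a few lines, assuming the raw data arrives as hypothetical question/answer pairs. The names `raw_records`, `normalize`, `to_chat_example`, and `clean_records` are illustrative, not part of any library. The sketch normalizes whitespace, drops incomplete rows and duplicates, and wraps each pair in the `messages` chat format used for fine-tuning.

```python
import re
from typing import Dict, List, Tuple

SYSTEM_PROMPT = "AI model trained for financial risk assessment."


def normalize(text: str) -> str:
    """Collapse runs of whitespace into single spaces and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def to_chat_example(question: str, answer: str) -> Dict:
    """Wrap one question/answer pair in the chat fine-tuning message format."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def clean_records(raw_records: List[Tuple[str, str]]) -> List[Dict]:
    """Normalize pairs, drop incomplete or duplicate ones, and convert the rest."""
    seen = set()
    examples = []
    for question, answer in raw_records:
        q, a = normalize(question), normalize(answer)
        if not q or not a:
            continue  # incomplete row: nothing to learn from
        key = (q.lower(), a.lower())
        if key in seen:
            continue  # duplicate after normalization (case-insensitive)
        seen.add(key)
        examples.append(to_chat_example(q, a))
    return examples


# Hypothetical raw data: row 2 repeats row 1 with extra spaces; row 3 has no answer.
raw_records = [
    ("How do you assess credit risk for small businesses?", "Review financial statements and credit history."),
    ("How do you  assess credit risk for small businesses? ", "Review financial statements and credit history."),
    ("What is a loan covenant?", ""),
]
examples = clean_records(raw_records)
print(len(examples))  # 1
```

Exact-match deduplication is the simplest choice; near-duplicate detection or bias review would need additional tooling on top of this.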

Example dataset format (in the JSONL file uploaded for fine-tuning, each example like this one occupies a single line; it is expanded here for readability):

```json
{
  "messages": [
    {"role": "system", "content": "AI model trained for financial risk assessment."},
    {"role": "user", "content": "How do you assess credit risk for small businesses?"},
    {"role": "assistant", "content": "Credit risk assessment involves evaluating financial statements, credit history, and market conditions to determine loan eligibility."}
  ]
}
```
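OpenAI's chat fine-tuning expects a JSONL file, with one complete JSON object like the one above per line. The sketch below serializes examples in that layout and runs a few basic checks before upload. The checks (known roles, string content, at least one assistant reply) are a minimal subset chosen for illustration, and the helper names are hypothetical; OpenAI's own upload validation is more thorough.

```python
import json
from typing import Dict, List

ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_example(example: Dict) -> List[str]:
    """Return the problems found in one chat training example (empty list if none)."""
    messages = example.get("messages")
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict):
            problems.append(f"message {i}: not a JSON object")
            continue
        if msg.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {i}: unknown role {msg.get('role')!r}")
        if not isinstance(msg.get("content"), str):
            problems.append(f"message {i}: content must be a string")
    if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
        problems.append("no assistant message to learn from")
    return problems


def to_jsonl(examples: List[Dict]) -> str:
    """Serialize examples one per line, refusing to emit any invalid example."""
    for n, example in enumerate(examples):
        problems = validate_example(example)
        if problems:
            raise ValueError(f"example {n}: " + "; ".join(problems))
    return "".join(json.dumps(ex, ensure_ascii=False) + "\n" for ex in examples)


good = {"messages": [
    {"role": "system", "content": "AI model trained for financial risk assessment."},
    {"role": "user", "content": "How do you assess credit risk for small businesses?"},
    {"role": "assistant", "content": "Credit risk assessment involves evaluating financial statements, credit history, and market conditions to determine loan eligibility."},
]}
# A typo such as a comma inside the role string is caught before upload.
bad = {"messages": [{"role": "system,", "content": "AI model trained for financial risk assessment."}]}

print(validate_example(bad))
# ["message 0: unknown role 'system,'", 'no assistant message to learn from']

# To save (placeholder file name):
# with open("train.jsonl", "w", encoding="utf-8") as f:
#     f.write(to_jsonl([good]))
```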

2. Train a New Fine-Tuned Model

Once the dataset is prepared and uploaded, the next step is to train the base LLM using this data. Training a fine-tuned model refines its responses by adjusting its internal weights based on the specific dataset provided.
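As a concrete illustration of starting training, this sketch assembles (but does not send) a request body for OpenAI's fine-tuning jobs endpoint, `POST /v1/fine_tuning/jobs`. The fields `model`, `training_file`, `validation_file`, `suffix`, and `hyperparameters.n_epochs` follow OpenAI's API reference as we understand it; the API has evolved (newer versions also describe a `method` object for hyperparameters), so check the current reference. The file IDs and the `build_fine_tuning_job` helper are hypothetical.

```python
import json
from typing import Dict, Optional


def build_fine_tuning_job(
    training_file_id: str,
    validation_file_id: Optional[str] = None,
    base_model: str = "gpt-4o-mini-2024-07-18",
    n_epochs: int = 3,
    suffix: Optional[str] = None,
) -> Dict:
    """Assemble a JSON body for POST /v1/fine_tuning/jobs (this function does not send it)."""
    body = {
        "model": base_model,
        "training_file": training_file_id,
        "hyperparameters": {"n_epochs": n_epochs},
    }
    if validation_file_id:
        body["validation_file"] = validation_file_id  # held-out data for validation loss
    if suffix:
        body["suffix"] = suffix  # becomes part of the fine-tuned model's name
    return body


# "file-abc123" and "file-def456" are hypothetical IDs returned by earlier uploads.
job = build_fine_tuning_job("file-abc123", "file-def456", suffix="risk-assessor")
print(json.dumps(job, indent=2))
```

With the official `openai` Python package, the same fields are passed as keyword arguments to `client.fine_tuning.jobs.create(...)`, after which the job can be polled until training finishes.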

Training Process:

- Define Training Objectives: Set clear goals for the fine-tuned model, such as improving accuracy in specific tasks, reducing response time, or maintaining a unique conversational tone.

- Run Training Iterations: The AI model makes multiple passes over the training data (epochs), gradually adjusting its parameters to optimize responses.

- Monitor Training Metrics: Track loss function values, accuracy rates, and response coherence during training to detect overfitting or underperformance.

- Optimize Training Performance: Adjust learning rates, data weights, and other training parameters to improve accuracy while minimizing computational costs.
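Monitoring loss is what reveals overfitting: training loss keeps falling while loss on held-out validation data stops improving or rises. The helper below is a generic sketch of that check using a simple patience rule, as in early stopping; it is not an OpenAI feature, the function name is hypothetical, and the loss values are made up for illustration.

```python
from typing import List, Optional


def best_epoch_before_overfitting(
    train_loss: List[float], val_loss: List[float], patience: int = 2
) -> Optional[int]:
    """Return the 1-based epoch with the lowest validation loss once validation loss has
    failed to improve for `patience` consecutive epochs while training loss kept falling.
    Return None if that overfitting pattern never appears."""
    best_epoch, best_val, stale = 0, float("inf"), 0
    for epoch, (train, val) in enumerate(zip(train_loss, val_loss)):
        if val < best_val:
            best_epoch, best_val, stale = epoch, val, 0  # validation still improving
        elif epoch > 0 and train < train_loss[epoch - 1]:
            stale += 1  # training improves while validation does not
            if stale >= patience:
                return best_epoch + 1
        else:
            stale = 0  # plateau on both curves: not the overfitting pattern
    return None


# Made-up loss curves for illustration.
train = [1.20, 0.90, 0.70, 0.55, 0.45]
val = [1.25, 1.00, 0.95, 0.98, 1.05]
print(best_epoch_before_overfitting(train, val))  # 3
```

Here the result suggests retraining with about three epochs; apply the same check to whatever per-epoch loss figures your training setup reports.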

3. Evaluate Results

Evaluating the custom LLM’s performance after training is crucial to ensure it meets expectations. This step involves rigorous testing and refinement.

Evaluation Strategies:

- Use a Test Dataset: A separate test dataset (not used during training) helps measure the model’s ability to generate accurate and meaningful responses.

- Assess Key Performance Metrics: Evaluate response quality using precision, recall, F1-score, perplexity (a measure of model uncertainty), and user satisfaction scores.

- Run Real-World Testing: Deploy the model in a controlled environment and interact with it using live queries to assess contextual accuracy.

- Identify Errors and Iterate: If the model provides inaccurate, irrelevant, or biased responses, refine the training data and retrain the model iteratively.
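For classification-style tasks, such as asking the fine-tuned model to flag whether a transaction description is high-risk, precision, recall, and F1 can be computed directly from its predictions on the held-out test set. A minimal standard-library sketch with made-up labels follows; perplexity and user-satisfaction scores need other tooling and are not covered here.

```python
from typing import List, Tuple


def precision_recall_f1(y_true: List[bool], y_pred: List[bool]) -> Tuple[float, float, float]:
    """Binary precision, recall, and F1 from gold labels and model predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)      # correctly flagged
    fp = sum(1 for t, p in pairs if not t and p)  # flagged but should not be
    fn = sum(1 for t, p in pairs if t and not p)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical test-set labels: True = "high risk".
y_true = [True, True, False, True, False, False]
y_pred = [True, False, False, True, True, False]
prec, rec, f1 = precision_recall_f1(y_true, y_pred)
print(f"precision={prec:.2f} recall={rec:.2f} f1={f1:.2f}")  # precision=0.67 recall=0.67 f1=0.67
```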

Example of performance analysis:

| Metric | Before Fine-Tuning | After Fine-Tuning |
|---|---|---|
| Accuracy | 78% | 92% |
| Response Latency | 1.5 sec | 1.1 sec |
| Compliance Rate | 85% | 98% |

4. Deploy and Use the Fine-Tuned Model

Once the fine-tuned LLM meets the required performance benchmarks, it is ready for deployment in real-world applications. A well-executed deployment ensures seamless integration, scalability, and ongoing optimization.

Deployment Strategies:

- Integrate with Business Systems: Connect the model to CRM platforms, chatbots, enterprise databases, virtual assistants, knowledge management systems, and other business applications to streamline automation and decision-making processes.

- API Deployment: Deploy the fine-tuned LLM behind an API so it can be integrated into web applications, mobile apps, or cloud-based platforms for real-time access and responsiveness. You can also use an OpenAI-compatible API endpoint provider, which offers the same request format as the official API but with simpler scaling and key management.

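- Edge and On-Premises Deployment: For businesses requiring enhanced security and compliance, deploy the LLM on private cloud infrastructure or on-premises servers to maintain data confidentiality and reduce reliance on external providers. This option applies to open-weight models you host yourself; models fine-tuned through OpenAI are accessed through a hosted API rather than downloaded.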

- Monitor Model Performance: Continuous monitoring is essential to ensure the model remains accurate and effective. Utilize tools like A/B testing, real-time feedback loops, and performance analytics dashboards to track response quality and user engagement.

- Refine and Update the Model: As user interactions evolve, periodic updates and retraining may be required. Incorporate new data, adjust parameters, and optimize responses to align the LLM with business objectives.

- Implement Security and Compliance Measures: Ensure the deployed LLM adheres to industry regulations (GDPR, HIPAA, SOC 2) by integrating data encryption, access control mechanisms, and bias detection frameworks to maintain trust and compliance.

By following these strategies, businesses can maximize the value of their custom LLMs, enhance operational efficiency, and deliver a more personalized AI-driven user experience.
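Once deployed, a fine-tuned OpenAI model is called like any other chat model, using the fine-tuned model ID returned by the training job (these IDs begin with `ft:`). The sketch below builds, but does not send, a Chat Completions request using only the standard library; making `base_url` a parameter is what lets the same code target the official API or an OpenAI-compatible provider. The model ID, API key, and `build_chat_request` helper are hypothetical placeholders.

```python
import json
import urllib.request


def build_chat_request(model_id: str, user_message: str, api_key: str,
                       base_url: str = "https://api.openai.com/v1") -> urllib.request.Request:
    """Prepare a POST to the Chat Completions endpoint; pass it to urlopen() to send."""
    body = {
        "model": model_id,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical fine-tuned model ID and key; replace with real values.
req = build_chat_request(
    model_id="ft:gpt-4o-mini-2024-07-18:my-org:risk-assessor:abc123",
    user_message="How do you assess credit risk for small businesses?",
    api_key="sk-placeholder",
)
# urllib.request.urlopen(req) would send it; the reply text is at
# choices[0]["message"]["content"] in the JSON response.
```

Keeping the endpoint and key as parameters also makes it easy to route a share of traffic to a second model version for A/B comparisons.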

Which Models Support Fine-Tuning?

At the time of writing, OpenAI allows fine-tuning for several models, including:

- gpt-4o-2024-08-06

- gpt-4o-mini-2024-07-18

- gpt-4-0613

- gpt-3.5-turbo-0125

- gpt-3.5-turbo-1106

- gpt-3.5-turbo-0613

Fine-tuning is particularly useful for applications requiring nuanced language understanding, specific tone, or domain-specific knowledge.

When Should You Create a Custom LLM?

While fine-tuning enhances AI capabilities, creating a fully custom LLM is a strategic decision that depends on specific business needs and operational challenges. Developing a custom LLM is beneficial when:

- Maintaining Consistent Tone, Style, or Formatting: If existing models fail to align with brand voice, industry jargon, or internal documentation standards, a custom LLM ensures uniformity across all communications.

- Reducing Errors in Complex Prompts: When AI struggles with technical accuracy, domain-specific language, or nuanced responses, a custom model enhances precision and reliability.

- Handling Edge Cases Effectively: Businesses in regulated industries or specialized domains often encounter unique scenarios that require tailored AI responses beyond general-purpose models.

- Performing Highly Specialized Tasks: A custom LLM is ideal for scientific research, financial modeling, legal documentation analysis, and other specialized use cases requiring deep contextual understanding.

- Meeting Industry Regulations and Compliance Requirements: Certain industries, such as finance, healthcare, and legal, must adhere to regulations like GDPR and HIPAA and to audit frameworks such as SOC 2. A custom LLM can support compliance when it is trained on properly governed data and deployed with controls for data privacy, bias mitigation, and secure handling of sensitive information.

Before investing in a custom LLM, businesses should explore alternatives like prompt engineering, retrieval-augmented generation (RAG), and function calls, which can enhance AI performance without the cost and complexity of fine-tuning or model training.

Everyday Use Cases for Custom LLMs

Custom LLMs unlock new levels of efficiency and personalization across industries, offering tailored AI-driven solutions for specific business needs:

1. Customer Support Automation

- Enables personalized, context-aware responses in real-time, improving customer satisfaction

- Reduces workload on human agents by handling routine inquiries, ticket triaging, and multilingual support

- Ensures responses align with brand tone and policies, reducing inconsistent messaging

2. Legal and Financial Analysis

- Automates contract analysis, regulatory compliance checks, and risk assessments with high accuracy

- Extracts key clauses, detects anomalies, and suggests legal interpretations for complex documents

- Enhances fraud detection and anti-money laundering efforts through pattern recognition

3. Healthcare Assistance

- Powers AI-driven medical consultations, diagnostic tools, and personalized treatment recommendations

- Supports healthcare professionals with evidence-based insights, clinical guidelines, and drug interaction analysis

- Ensures compliance with HIPAA and other patient data privacy regulations

4. E-commerce & Retail

- Enhances product discovery, AI-powered recommendations, and customer engagement

- Personalizes marketing campaigns using customer behavior analysis

- Automates inventory management and demand forecasting for better stock control

5. Content Generation & Marketing

- Generates industry-specific articles, reports, and marketing materials with precision

- Automates blog writing, SEO content optimization, and personalized email campaigns

- Supports multilingual content creation for global brands

6. Security and Compliance

- Detects potential compliance violations in legal documents, contracts, and policies

- Automates incident reporting, fraud detection, and cyber threat analysis

- Ensures bias mitigation, fairness auditing, and regulatory transparency in AI-generated outputs

By leveraging custom LLMs, businesses can optimize operations, enhance decision-making, and drive innovation while ensuring compliance, security, and superior user experiences.

Preparing Training Data for Custom LLMs

A well-structured dataset is critical for effective fine-tuning. Training data should:

- Include diverse conversation samples

- Cover both common and edge-case scenarios

- Contain high-quality responses to guide model behavior

- Maintain data security and privacy compliance

Example Dataset Format

```json
{
  "messages": [
    {"role": "system", "content": "AI model trained for legal consultations."},
    {"role": "user", "content": "What are the implications of a breach of contract?"},
    {"role": "assistant", "content": "A breach of contract may result in damages, specific performance, or termination of the agreement."}
  ]
}
```

Multi-turn conversations can also be included, incorporating user interactions to refine model performance.
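A multi-turn example uses the same `messages` format with more turns. OpenAI's chat fine-tuning format also accepts an optional `weight` key (0 or 1) on assistant messages, which keeps an earlier, weaker reply as context without training on it. The conversation content and the `trained_replies` helper below are illustrative; the helper treats a missing weight as 1.

```python
import json
from typing import Dict, List

# Illustrative two-turn legal conversation in the chat fine-tuning format.
multi_turn = {
    "messages": [
        {"role": "system", "content": "AI model trained for legal consultations."},
        {"role": "user", "content": "What are the implications of a breach of contract?"},
        # weight 0: kept as context, but the model is not trained on this weaker reply
        {"role": "assistant", "content": "It depends on the contract.", "weight": 0},
        {"role": "user", "content": "Can you be more specific?"},
        # weight 1: the reply the model should learn to produce
        {
            "role": "assistant",
            "content": "A breach of contract may result in damages, specific performance, or termination of the agreement.",
            "weight": 1,
        },
    ]
}


def trained_replies(example: Dict) -> List[str]:
    """Assistant replies that contribute to training (a missing weight is treated as 1)."""
    return [
        m["content"]
        for m in example["messages"]
        if m["role"] == "assistant" and m.get("weight", 1) == 1
    ]


print(trained_replies(multi_turn))
# One line of the JSONL training file:
print(json.dumps(multi_turn))
```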

Training and Testing Splits

To ensure optimal fine-tuning, data should be split into:

- Training Set: Used to teach the model

- Test Set: Evaluates model performance after fine-tuning

Statistical analysis of test results provides insights into model improvements and necessary adjustments.
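A reproducible split keeps the test set stable across retraining runs, so scores stay comparable. This sketch shuffles a copy of the data with a fixed seed and holds out a fraction for testing; the 80/20 ratio, the seed, and the `train_test_split` name are arbitrary choices for illustration.

```python
import random
from typing import List, Tuple


def train_test_split(examples: List[dict], test_fraction: float = 0.2,
                     seed: int = 42) -> Tuple[List[dict], List[dict]]:
    """Shuffle a copy with a fixed seed, then hold out test_fraction of it for testing."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = examples[:]  # leave the caller's list untouched
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


examples = [{"id": i} for i in range(10)]
train, test = train_test_split(examples)
print(len(train), len(test))  # 8 2
```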

Token Limits and Cost Estimation

Fine-tuning costs depend on the base model, the number of tokens in the training file, and the number of epochs. Context-length limits for common models are:

| Model | Inference Context Length | Training Context Length |
|---|---|---|
| GPT-4o-mini | 128,000 tokens | 65,536 tokens |
| GPT-3.5-turbo | 16,385 tokens | 16,385 tokens |

To estimate training costs:

(base training cost per 1M tokens / 1M) × total tokens × epochs

For example, fine-tuning GPT-4o-mini with 100,000 tokens over three epochs costs approximately $0.90.
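The cost formula translates directly into code. The $3.00 per 1M training tokens used below is the rate implied by the GPT-4o-mini example, not a quote of current pricing, and `fine_tuning_cost` is a hypothetical helper name; check OpenAI's pricing page before budgeting.

```python
def fine_tuning_cost(price_per_1m_tokens: float, total_tokens: int, epochs: int) -> float:
    """Estimated training cost in dollars: (price per 1M tokens / 1M) x total tokens x epochs."""
    return price_per_1m_tokens / 1_000_000 * total_tokens * epochs


# $3.00 per 1M training tokens is the rate implied by the example, not a live price.
cost = fine_tuning_cost(price_per_1m_tokens=3.00, total_tokens=100_000, epochs=3)
print(f"${cost:.2f}")  # $0.90
```

Because cost scales linearly with both tokens and epochs, doubling either one doubles the estimate.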

Real-World Applications of Custom LLMs

Custom LLMs are transforming industries by delivering tailored AI solutions that enhance automation, decision-making, and efficiency. Below are some of the most impactful applications across various sectors:

1. E-commerce

- Personalized Product Recommendations: Custom LLMs analyze customer preferences, browsing behavior, and purchase history to provide highly accurate product suggestions, increasing conversion rates.

- AI-Driven Customer Support: Virtual assistants and chatbots handle inquiries, returns, and order tracking while maintaining brand-specific communication styles.

- Fraud Detection: AI models detect suspicious transactions and prevent payment fraud, identity theft, and account takeovers.

2. Healthcare

- AI-Powered Patient Consultation: Custom LLMs assist doctors by analyzing medical histories, symptoms, and test results to generate preliminary diagnoses and treatment plans.

- Medical Data Analysis: AI models process vast amounts of patient records, clinical research, and drug interactions, helping healthcare professionals make informed decisions.

- HIPAA-Compliant Chatbots: Secure AI chatbots answer patient queries, schedule appointments, and provide post-treatment guidance while ensuring compliance with data privacy regulations.

3. Finance

- Automated Risk Assessment: AI models analyze market trends, credit histories, and investment portfolios to assess risk and recommend financial strategies.

- Fraud Detection and Prevention: Custom LLMs detect unusual transaction patterns and suspicious activities in real time, reducing financial crime.

- Financial Forecasting: AI-driven predictive models help institutions make data-driven decisions by analyzing economic indicators, stock market trends, and investment risks.

4. Legal

- AI-Driven Contract Analysis: Custom LLMs extract key clauses, detect legal risks, and automate document reviews, improving efficiency for law firms.

- Compliance Checks: AI models ensure businesses adhere to industry regulations by monitoring policy updates and flagging non-compliance issues.

- Legal Research Automation: Custom AI systems scan case laws, statutes, and precedents, reducing legal professionals’ research time.

5. Manufacturing

- Supply Chain Optimization: AI-driven models enhance logistics planning, supplier management, and demand forecasting, reducing delays and costs.

- Predictive Maintenance: Custom LLMs analyze equipment performance data to predict potential failures, minimizing downtime and repair costs.

- Inventory Management: AI automates stock tracking, order replenishment, and warehouse management, improving operational efficiency.

Conclusion

Custom LLMs offer unparalleled advantages for businesses seeking to enhance AI performance, efficiency, and cost-effectiveness. Organizations can ensure superior accuracy, compliance, and scalability by developing fine-tuned models tailored to industry-specific needs. Adopting custom LLMs will drive innovation and competitive differentiation across various sectors as AI technology evolves.
